nvolt - your ai knowledge vault

A couple of hours, a couple of coffees, and a couple of nerds – that’s all it took to spin up a little PoC called nvolt. This time, I wasn’t alone: Soma (hargitaisoma.hu) joined the fun, and together we built something that scratches a very specific itch.

# The itch: PDFs, but smarter

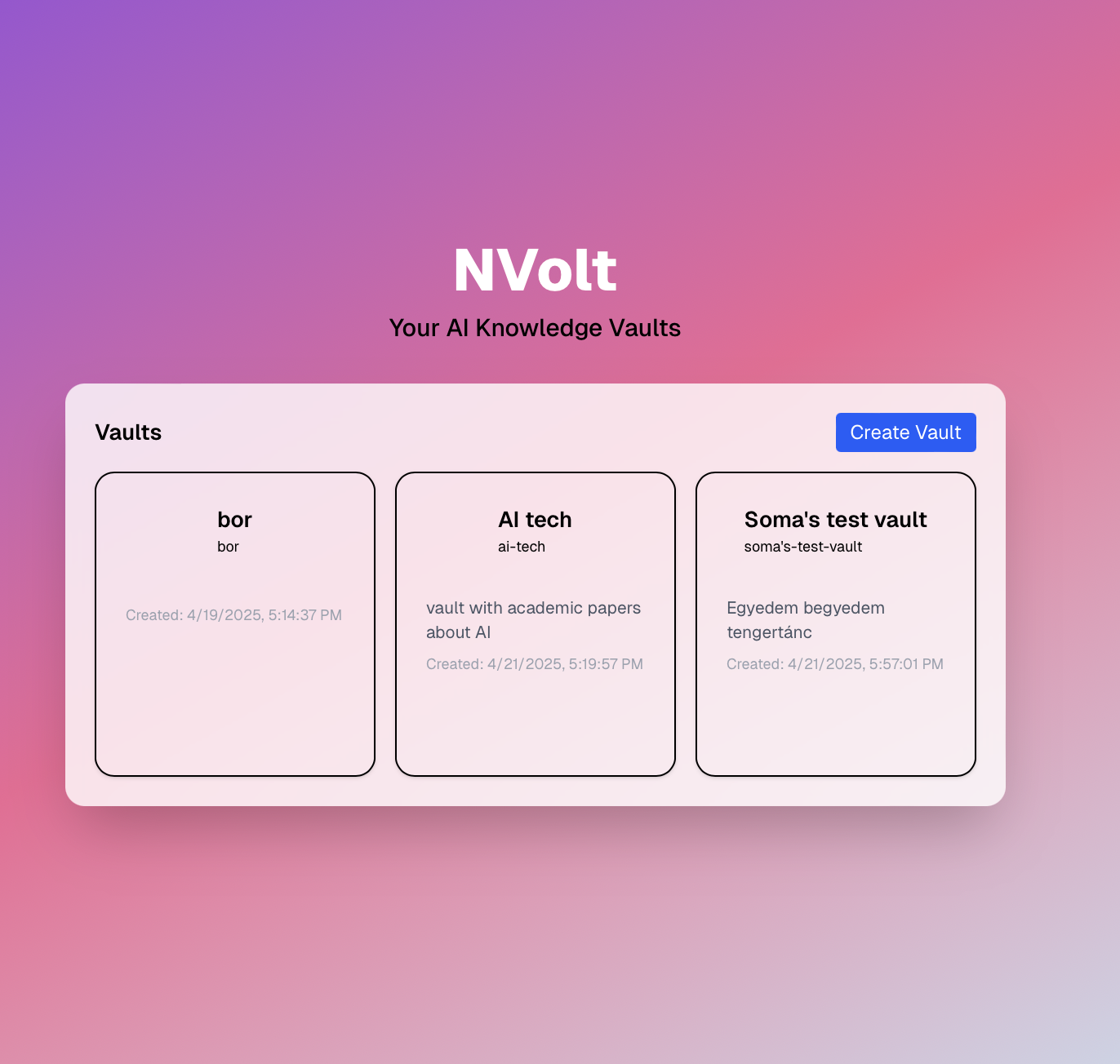

You know the feeling: you download a 60-page PDF, and all you want is to ask it a question. Not read it, not Ctrl+F, not scroll through endless pages. Just: “Hey, what does this thing say about X?”. That’s what nvolt does. You upload a PDF, and instead of a boring summary, you get to chat with it. Ask anything, get answers grounded in the actual document. Like a chatbot, but it actually knows what it’s talking about (because it’s read your file).

# How it works (the short version)

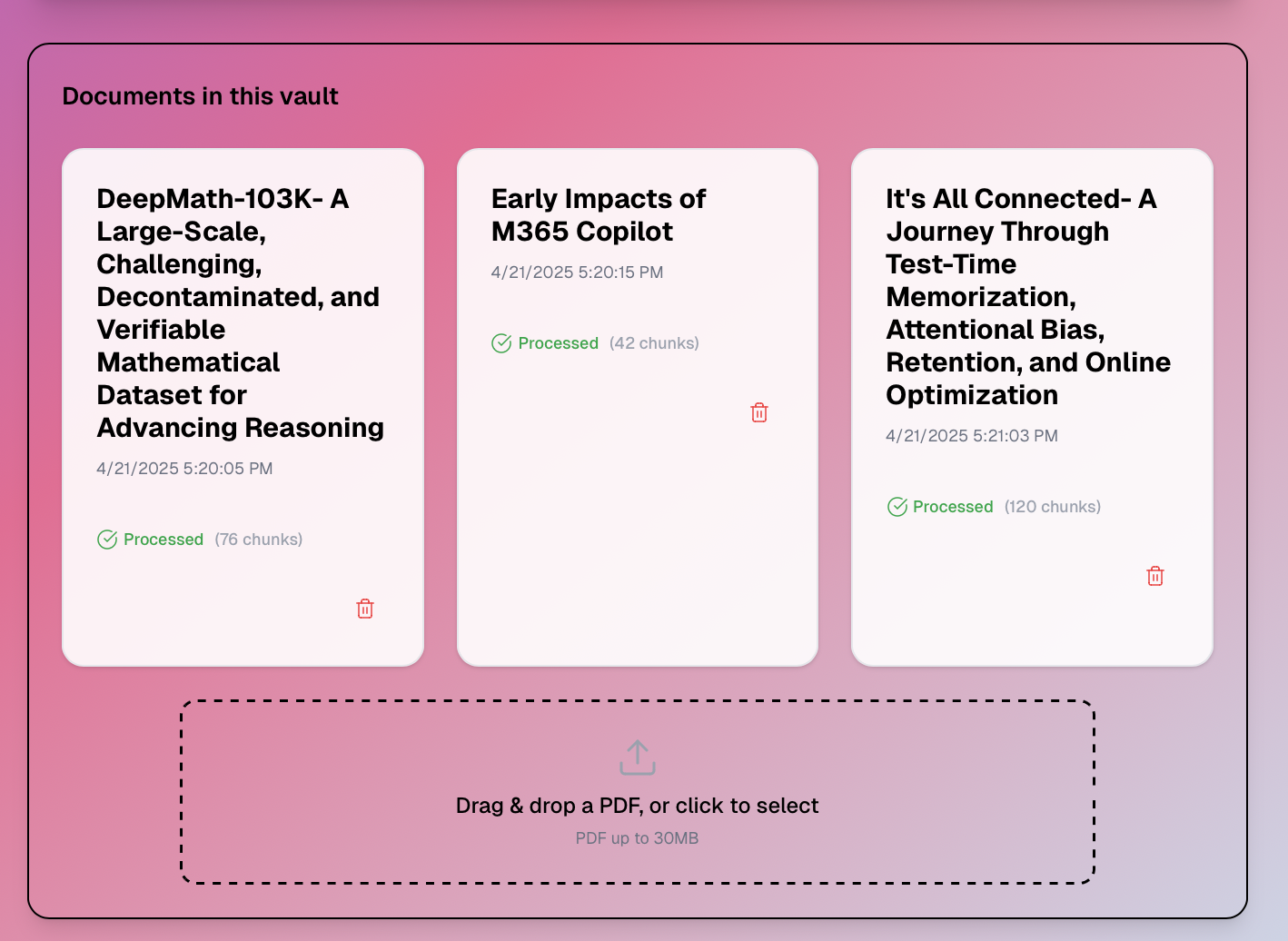

- Upload a PDF

- The backend chews through it, splits it up, and creates vector embeddings (think: mathematical fingerprints for each chunk)

- Everything gets indexed in a vector database (chroma)

- You ask a question in the chat

- The system finds the most relevant bits, feeds them to GPT-4o, and you get a contextual answer – with references

All this happens in seconds. The tech stack is a bit of a kitchen sink: FastAPI, Langchain, OpenAI, ChromaDB, Next.js, Tailwind, shadcn/ui, and a bit of Python glue.

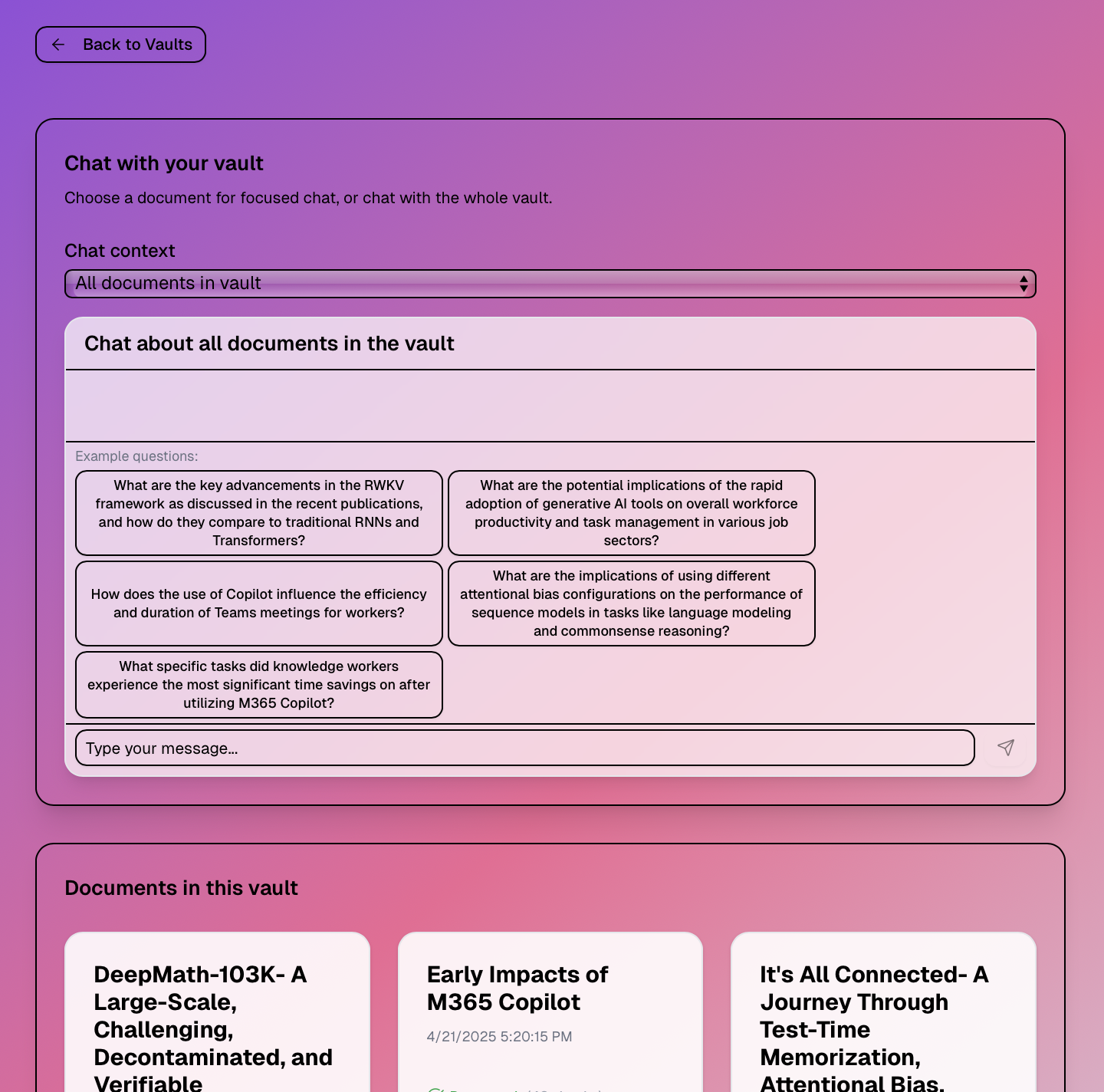

# Vaults: because one PDF is never enough

Originally, nvolt was just about single documents. But then we thought: what if you want to group a bunch of PDFs together? Enter Vaults. Now you can create a vault (think: a collection), upload multiple documents, and chat across all of them. Each vault gets a unique, shareable URL. You can organize, share, and query collections – not just files.

Under the hood, this meant adding a new data model (vaults, with slugs for URLs), updating endpoints, and making sure vector search works across all docs in a vault. The frontend got new pages for vaults, and the chat interface now works in the context of a whole collection. (Migration? Old docs just got assigned to a default vault. Easy.)

# Why bother?

Honestly, this was just a fun weekend project. But it feels like a glimpse of how we’ll interact with documents in the future: not by reading, but by conversing. And it was a blast to build something end-to-end with Soma, from the first “what if…” to a working demo.

If you want to try it, or just poke around the code, let me know. Or better yet, build your own version. The future is chatty – even for PDFs.